Docker for local web development, part 4: smoothing things out with Bash

Last updated: 2022-02-19 :: Published: 2020-04-13

In this series

- Introduction: why should you care?

- Part 1: a basic LEMP stack

- Part 2: put your images on a diet

- Part 3: a three-tier architecture with frameworks

- Part 4: smoothing things out with Bash ⬅️ you are here

- Part 5: HTTPS all the things

- Part 6: expose a local container to the Internet

- Part 7: using a multi-stage build to introduce a worker

- Part 8: scheduled tasks

- Conclusion: where to go from here


Introduction

As our development environment is taking shape, the number of commands we need to remember starts to build up. Here are a few of them, as a reminder:

- docker compose up -d to start the containers;

- docker compose logs -f nginx to watch the logs of Nginx;

- docker compose exec backend php artisan to run Artisan commands (or with run --rm if the container isn't running);

- docker compose exec frontend yarn to run Yarn commands (ditto);

- etc.

Clearly, none of the examples above is impossible to remember and, with some practice, anyone would eventually know them by heart. Yet that is a lot of text to type, repeatedly, and if you haven't used a specific command for a while, looking for the right syntax can end up taking a significant amount of time.

Moreover, the scope of this tutorial series is rather limited; in practice, you're likely to deal with projects much more complex than this, requiring many more commands.

There is little point in implementing an environment that ends up increasing the developer's mental load. Thankfully, there is a great tool out there that can help us mitigate this issue, one you've probably at least heard of and that is present pretty much everywhere: Bash. With little effort, Bash will allow us to add a layer on top of Docker to abstract away most of the complexity, and introduce a standardised, user-friendly interface instead.

The assumed starting point of this tutorial is where we left things at the end of the previous part, corresponding to the repository's part-3 branch.

If you prefer, you can also check out the part-4 branch directly, which is the final result of today's article.

Bash?

Bash has been around since 1989, meaning it's pretty much as old as the Internet as we know it. It is essentially a command processor (a shell), executing commands either typed in a terminal or read from a file (a shell script).

Bash allows its users to automate and perform a great variety of tasks, which I am not even going to try and list. What's important to know in the context of this series is that it can run pretty much everything a human usually types in a terminal, and that it is natively present on Unix systems (Linux and macOS) and available on Windows via WSL 2.

Its flexibility and portability make it an ideal candidate for what we want to achieve today. Let's dig in!

The application menu

For starters, let's create a file named demo at the root of our project (alongside docker-compose.yml) and give it execution permissions:

$ touch demo

$ chmod +x demo

This file will contain the Bash script allowing us to interact with the application.

Open it and add the following line at the very top:

#!/bin/bash

This is just to indicate that Bash shall be the interpreter of our script, and where to find it (/bin/bash is the standard location on just about every Unix system, and also on Windows' Git Bash).

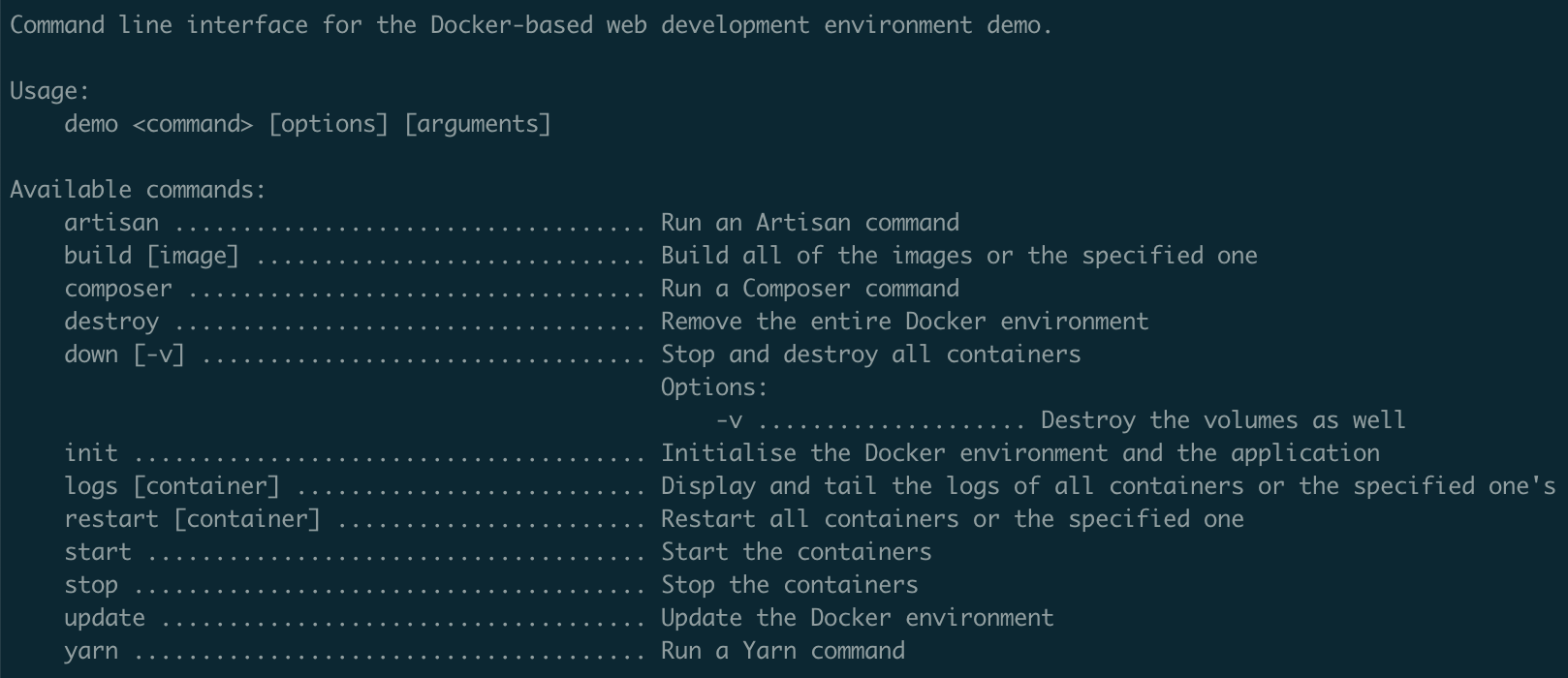

The first thing we want to do is to create a menu for our interface, listing the available commands and how to use them.

Update the content of the file with the following:

#!/bin/bash

case "$1" in
    *)
        cat << EOF

Command line interface for the Docker-based web development environment demo.

Usage:
    demo <command> [options] [arguments]

Available commands:
    artisan ................................... Run an Artisan command
    build [image] ............................. Build all of the images or the specified one
    composer .................................. Run a Composer command
    destroy ................................... Remove the entire Docker environment
    down [-v] ................................. Stop and destroy all containers
            Options:
                -v .................... Destroy the volumes as well
    init ...................................... Initialise the Docker environment and the application
    logs [container] .......................... Display and tail the logs of all containers or the specified one's
    restart ................................... Restart the containers
    start ..................................... Start the containers
    stop ...................................... Stop the containers
    update .................................... Update the Docker environment
    yarn ...................................... Run a Yarn command

EOF
        exit 1
        ;;
esac

case (sometimes known as switch or match in other programming languages) is a basic control structure allowing us to do different things based on the value of $1, $1 being the first parameter passed on to the demo script.

For example, with the following command, $1 would contain the string unicorn:

$ demo unicorn

For now, we only address the default case, which is represented by *. In other words, if we call our script without any parameter, or one whose value is not a specific case of the switch, the menu will be displayed.
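To make the default case more concrete, here is a minimal, standalone sketch. It is not part of the demo script: the dispatch function and its messages are made up for illustration. It shows that both an unknown parameter and a missing one end up in the * branch:

```shell
#!/bin/bash

# Hypothetical helper, for illustration only: report which branch matched.
dispatch () {
    case "$1" in
        start)
            echo "matched: start"
            ;;
        *)
            # Reached when $1 is empty or matches no other pattern
            echo "matched: default"
            ;;
    esac
}

dispatch start
dispatch unicorn
dispatch
```

Only the first call hits a named case; the other two print the default message.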

We now need to make this script available from anywhere in a terminal. To do so, add the following function to your local .bashrc file (or .zshrc, or anything else according to your configuration):

function demo {
    cd /PATH/TO/YOUR/PROJECT && bash demo $*
    cd -
}

Wait. What? Each time you open a new terminal window, Bash will try and read the content of some files, if it can find them. These files contain commands and instructions you basically want Bash to run at start-up, such as updating the $PATH variable, running a script somewhere or, in our case, making a function available globally. Different files can be used, but to keep it simple we'll stick to updating or creating the .bashrc file in your home folder, and adding the demo function above to it:

$ vi ~/.bashrc

From then on, every time you open a terminal window, this file will be read and the demo function made available globally. This will work whatever your operating system is (including Windows, as long as you do this from Git Bash, or from your terminal of choice).

Make sure to replace /PATH/TO/YOUR/PROJECT with the absolute path of the project's root (if you are unsure what that is, run pwd from the folder where the docker-compose.yml file sits and copy and paste the result). The function essentially changes the current directory to the project's root (cd /PATH/TO/YOUR/PROJECT) and executes the demo script using Bash (bash demo), passing on all of the command parameters to it ($*), which are basically all of the characters found after demo.

For example, if you typed:

$ demo I am a teapot

This is what the function would do behind the scenes:

$ cd /PATH/TO/YOUR/PROJECT && bash demo I am a teapot

The last instruction of the function (cd -) simply changes the current directory back to the previous one. In other words, you can run demo from anywhere – you will always be taken back to the directory the command was initially run from.
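One caveat worth knowing: in Bash, an unquoted $* is split on whitespace, so a quoted argument containing spaces reaches the script as several words. The sketch below compares $* with the quoted "$@" form, which keeps each argument intact. The count_args, with_star and with_at helpers are hypothetical names used for illustration only; they are not part of the project:

```shell
#!/bin/bash

# Hypothetical helper: print the number of arguments received
count_args () {
    echo "$#"
}

# Forwards parameters the same way the demo function does (unquoted $*)
with_star () {
    count_args $*
}

# Forwards parameters with "$@", preserving each argument as typed
with_at () {
    count_args "$@"
}

with_star make:model "Foo Bar"   # prints 3: "Foo Bar" was split in two
with_at make:model "Foo Bar"     # prints 2: "Foo Bar" stayed in one piece
```

If you expect to pass arguments containing spaces, replacing $* with "$@" in the demo function is one possible adjustment.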

Before we move on, there's something else we need to add to this file:

export HOST_UID=$(id -u)

Remember when we set a non-root user in the previous part to avoid file permission issues? We had to pass the host machine's current user ID to the docker-compose.yml file, and we did so using the .env file at the root of the project.

The line above allows us to export that value as an environment variable instead, that is directly accessible by the docker-compose.yml file.

That means you can now remove this line from the .env file (your value for HOST_UID might be a different one):

HOST_UID=501

This export will automatically happen any time you open a terminal window, meaning there is no need to manually set the user ID anymore.
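As a quick sanity check, and assuming nothing beyond standard Bash and the id utility, the following sketch shows that an exported variable is visible to child processes. This is what allows a command started from the same terminal to read HOST_UID:

```shell
#!/bin/bash

# Export the current user's ID, as done in .bashrc
export HOST_UID=$(id -u)

# A child process (here, a new Bash instance) inherits exported variables
bash -c 'echo "HOST_UID in child process: $HOST_UID"'
```

Without the export keyword, the variable would only exist in the current shell and the child process would print an empty value.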

Save the changes and open a new terminal window or source the file for them to take effect:

$ source ~/.bashrc

source will essentially load the content of the sourced file into the current shell, without having to restart it entirely.

Let's display our menu:

$ demo

If all went well, you should see something similar to this:

Looks fancy, doesn't it? Yet, so far none of these commands is doing anything. Let's fix this!

Windows users: watch out for CRLF line endings! Unix files and Windows files use different invisible characters for line endings – the former adds the LF character only, while the latter adds both CR and LF. Your Bash script won't work with the latter, so make sure you change the file's line endings for LF if necessary. If you are not sure how to proceed, simply search for your IDE followed by "line endings" online – most modern text editors offer an easy way to make the switch.

More generally, it is a good practice to create a .gitattributes file at the root of your project containing the line * text=auto, which will automatically convert line endings at checkout and commit (see here).
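If you would rather check and fix line endings from the terminal, here is a small sketch using standard tools only (grep, tr, cat). The has_crlf and to_lf function names are made up for this example, and the demonstration runs on a throwaway file rather than on the demo script:

```shell
#!/bin/bash

# Return success if the given file contains at least one carriage return (CR)
has_crlf () {
    grep -q $'\r' "$1"
}

# Strip all CR characters, leaving LF-only line endings.
# The result is written back with cat so the file keeps its permissions.
to_lf () {
    tr -d '\r' < "$1" > "$1.lf" && cat "$1.lf" > "$1" && rm -f "$1.lf"
}

# Demonstration on a temporary file with Windows line endings
sample=$(mktemp)
printf 'echo one\r\necho two\r\n' > "$sample"

has_crlf "$sample" && echo "before: CRLF detected"
to_lf "$sample"
has_crlf "$sample" || echo "after: LF only"

rm -f "$sample"
```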

Basic commands

We will start with a simple command, to give you a taste. Update the switch in the demo file so it looks like this:

case "$1" in
    start)
        start
        ;;
    *)
        cat << EOF
...

We've added the start case, in which we call the start function without any parameters. That function doesn't exist yet – at the top of the file, under #!/bin/bash, add the following code:

# Create and start the containers and volumes
start () {
    docker compose up -d
}

This short function simply runs the now familiar docker compose up -d, which starts the containers in the background. Notice that we don't need to change the current directory, as when we invoke the demo function, we are automatically taken to the folder where the demo file is, which is also where docker-compose.yml resides.

Save the file and try out the new command (it doesn't matter whether the containers are already running):

$ demo start

That's it! You can now start your project from anywhere in a terminal using the command above, which is much simpler to type and remember than docker compose up -d.

Let's give this another go, this time to display the logs. Add another case to the structure:

case "$1" in
    logs)
        logs
        ;;
    start)
        start
        ;;
    *)
        cat << EOF
...

And the corresponding function:

# Display and tail the logs
logs () {
    docker compose logs -f
}

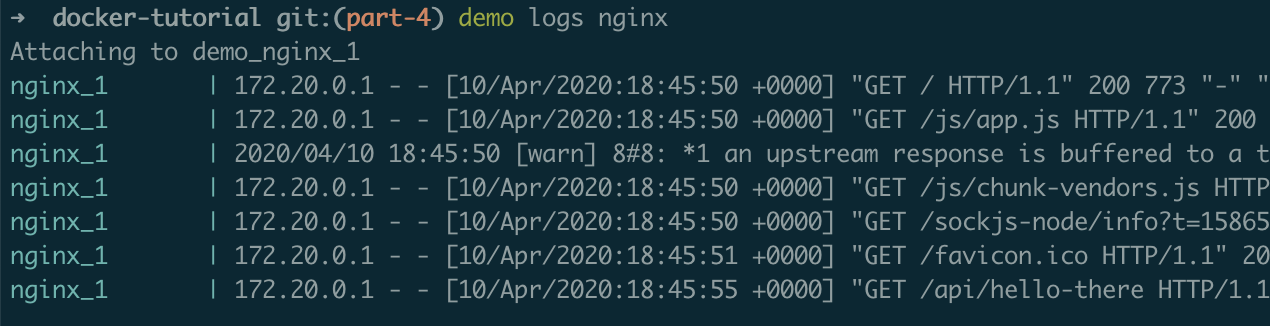

Try it out:

$ demo logs

You now have a shortcut command to access the containers' logs easily. That's nice, but how about displaying the logs of a specific container?

Let's modify the case slightly:

case "$1" in
    logs)
        logs "${@:2}"
        ;;
    start)
        start
        ;;
    *)
        cat << EOF
...

Instead of directly calling the logs function, we are now also passing on the script's parameters to it, starting from the second one, if any (that's the "${@:2}" bit). The reason is that when we type demo logs nginx, the first parameter of the script is logs, and we only want to pass on nginx to the logs function.

Update the logs function accordingly:

# Display and tail the logs
logs () {
    docker compose logs -f "${@:1}"
}

Using the same syntax, we append the function's parameters to the command if any, starting from the first one ("${@:1}", which is equivalent to "$@").
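To see what this slicing syntax does in isolation, here is a short sketch that does not involve Docker. Both show and forward_from_second are hypothetical helpers written for this example:

```shell
#!/bin/bash

# Hypothetical helper: print how many arguments were received, and which
show () {
    echo "$# argument(s): $*"
}

# Mimic the script's case: forward everything after the first parameter
forward_from_second () {
    show "${@:2}"
}

forward_from_second logs nginx   # forwards "nginx" only
forward_from_second logs         # forwards nothing at all
```

In the second call, "${@:2}" expands to zero words rather than to an empty string, which is why the real logs function still works when no container name is given.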

Save the file again and give it a try:

$ demo logs nginx

Now that you get the principle, and as most of the other functions work in a similar fashion, here is the rest of the file, with some block comments to make it more readable:

#!/bin/bash

#######################################
# FUNCTIONS
#######################################

# Run an Artisan command
artisan () {
    docker compose run --rm backend php artisan "${@:1}"
}

# Build all of the images or the specified one
build () {
    docker compose build "${@:1}"
}

# Run a Composer command
composer () {
    docker compose run --rm backend composer "${@:1}"
}

# Remove the entire Docker environment
destroy () {
    read -p "This will delete containers, volumes and images. Are you sure? [y/N]: " -r
    if [[ ! $REPLY =~ ^[Yy]$ ]]; then exit; fi
    docker compose down -v --rmi all --remove-orphans
}

# Stop and destroy all containers
down () {
    docker compose down "${@:1}"
}

# Display and tail the logs of all containers or the specified one's
logs () {
    docker compose logs -f "${@:1}"
}

# Restart the containers
restart () {
    stop && start
}

# Start the containers
start () {
    docker compose up -d
}

# Stop the containers
stop () {
    docker compose stop
}

# Run a Yarn command
yarn () {
    docker compose run --rm frontend yarn "${@:1}"
}

#######################################
# MENU
#######################################

case "$1" in
    artisan)
        artisan "${@:2}"
        ;;
    build)
        build "${@:2}"
        ;;
    composer)
        composer "${@:2}"
        ;;
    destroy)
        destroy
        ;;
    down)
        down "${@:2}"
        ;;
    logs)
        logs "${@:2}"
        ;;
    restart)
        restart
        ;;
    start)
        start
        ;;
    stop)
        stop
        ;;
    yarn)
        yarn "${@:2}"
        ;;
    *)
        cat << EOF

Command line interface for the Docker-based web development environment demo.

Usage:
    demo <command> [options] [arguments]

Available commands:
    artisan ................................... Run an Artisan command
    build [image] ............................. Build all of the images or the specified one
    composer .................................. Run a Composer command
    destroy ................................... Remove the entire Docker environment
    down [-v] ................................. Stop and destroy all containers
            Options:
                -v .................... Destroy the volumes as well
    init ...................................... Initialise the Docker environment and the application
    logs [container] .......................... Display and tail the logs of all containers or the specified one's
    restart ................................... Restart the containers
    start ..................................... Start the containers
    stop ...................................... Stop the containers
    update .................................... Update the Docker environment
    yarn ...................................... Run a Yarn command

EOF
        exit
        ;;
esac

Mind the fact that run --rm is used to execute Artisan, Composer and Yarn commands on the backend and frontend containers respectively, basically allowing us to do so whether the containers are running or not.

Also, you'll notice that the restart command is essentially a shortcut for demo stop followed by demo start, even though docker compose restart is also an option. The reason for that is that the latter is only truly useful to restart the containers' processes (e.g. Nginx, so it picks up a server configuration's changes, for instance). But say you've updated and rebuilt an image: running docker compose restart won't recreate the corresponding container based on the image's new version, but reuse the old container instead.

Essentially, in most cases stopping and starting (up) the containers is more likely to achieve the desired effect than a simple docker compose restart, even if it takes a few more seconds.

Finally, as the destroy function's job is to delete all of the containers, volumes and images, it would be quite a pain to run it by mistake, so I made it failsafe by adding a confirmation prompt.
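The confirmation pattern can be tried on its own, without touching Docker. The sketch below wraps it in a hypothetical confirm function that returns a status instead of exiting, and feeds it answers through a pipe so that it runs non-interactively (Bash only shows the read -p prompt when the input comes from a terminal):

```shell
#!/bin/bash

# Hypothetical helper: ask for confirmation, succeed only on "y" or "Y"
confirm () {
    read -p "$1 [y/N]: " -r
    [[ $REPLY =~ ^[Yy]$ ]]
}

# Non-interactive demonstration: answers are piped in instead of typed
echo "y" | confirm "Delete everything?" && echo "confirmed"
echo "n" | confirm "Delete everything?" || echo "aborted"
echo ""  | confirm "Delete everything?" || echo "aborted (default is No)"
```

Anything other than a single y or Y, including just pressing Enter, counts as a refusal, which matches the capital N in the [y/N] hint.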

Most of the commands are now covered, but you might have noticed that a couple of them are still missing: init and update. These are a bit special, so the next section is dedicated to them.

Initialising and updating the project

Let's take a step back for a minute. Imagine you've been given access to the project's repository in order to install it on your machine. The first thing you'd do is to clone it locally, and to add the demo function to .bashrc so you can interact with the application.

From there, you would still need to perform the following actions:

- Copy .env.example to .env and complete the latter at the root of the project;

- Do the same in src/backend;

- Download and build the images;

- Install the frontend's dependencies;

- Install the backend's dependencies;

- Run the backend's database migrations;

- Generate the backend's application key;

- Start the containers.

While the Bash layer facilitates going through that list, that's still quite some work to do in order to obtain a functional setup, and it would be easy to miss a step. What if you need to reset the environment? Or to install it on another developer's machine? Or guide a client through the process?

Thankfully, now that we've introduced Bash to the mix, automating the tasks above is fairly simple.

First, add the two missing cases to demo:

    init)
        init
        ;;
    update)
        update
        ;;

And the corresponding functions (if your file is getting messy, you can also take a look at the final result here):

# Create .env from .env.example
env () {
    if [ ! -f .env ]; then
        cp .env.example .env
    fi
}

# Initialise the Docker environment and the application
init () {
    env \
        && down -v \
        && build \
        && docker compose run --rm --entrypoint="//opt/files/init" backend \
        && yarn install \
        && start
}

# Update the Docker environment
update () {
    git pull \
        && build \
        && composer install \
        && artisan migrate \
        && yarn install \
        && start
}

Let's have a look at init first, whose job is to initialise the whole project. The first thing the function does is call another function, env, which we defined right above it, and which is responsible for creating the .env file as a copy of .env.example if it doesn't exist. The next thing init does is ensure the containers and volumes are destroyed, as the aim is to be able to both initialise the project from scratch and reset it. It then builds the images (which will also be downloaded if necessary), and goes on to run a script on the backend container. Finally, it installs the frontend's dependencies and starts the containers.
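Note that the steps are chained with &&, meaning each one only runs if the previous one succeeded. The following sketch illustrates that behaviour with hypothetical stand-in functions (env_step, build_step and start_step are not part of the project), one of which fails on purpose:

```shell
#!/bin/bash

# Hypothetical stand-ins for the real functions: each one just announces itself.
# build_step is made to fail on purpose to show what happens mid-chain.
env_step ()   { echo "env"; }
build_step () { echo "build"; return 1; }
start_step () { echo "start"; }

init_sketch () {
    env_step \
        && build_step \
        && start_step
}

init_sketch
echo "exit status: $?"
```

The chain stops right after the failing step, so start is never printed, and the function reports the failure through its exit status.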

You will probably recognise most of the items from the list at the beginning of this section, but what are this init file and that entrypoint we are referring to?

Since the backend requires a bit more work, we can isolate the corresponding steps into a single script that we will mount on the container in order to run it there. This means we need to make a small addition to the backend service in docker-compose.yml:

  # Backend Service
  backend:
    build:
      context: ./src/backend
      args:
        HOST_UID: $HOST_UID
    working_dir: /var/www/backend
    volumes:
      - ./src/backend:/var/www/backend
      - ./.docker/backend/init:/opt/files/init
    depends_on:
      mysql:
        condition: service_healthy

Since the script will be run on the container, we need Bash to be installed on it, which is not the case by default on Alpine. We need to update the backend's Dockerfile accordingly (in src/backend):

FROM php:8.1-fpm-alpine

# Install extensions
RUN docker-php-ext-install pdo_mysql bcmath opcache

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

# Configure PHP
COPY .docker/php.ini $PHP_INI_DIR/conf.d/opcache.ini

# Use the default development configuration
RUN mv $PHP_INI_DIR/php.ini-development $PHP_INI_DIR/php.ini

# Install Bash
RUN apk --no-cache add bash

# Create user based on provided user ID
ARG HOST_UID
RUN adduser --disabled-password --gecos "" --uid $HOST_UID demo

# Switch to that user
USER demo

Build the image:

$ demo build backend

Finally, let's create the init file, in the .docker/backend folder:

#!/bin/bash

# Install Composer dependencies
composer install -d "/var/www/backend"

# Deal with the .env file if necessary
if [ ! -f "/var/www/backend/.env" ]; then
    # Create .env file
    cat > "/var/www/backend/.env" << EOF
APP_NAME=demo
APP_ENV=local
APP_KEY=
APP_DEBUG=true
APP_URL=http://backend.demo.test

LOG_CHANNEL=single

DB_CONNECTION=mysql
DB_HOST=mysql
DB_PORT=3306
DB_DATABASE=demo
DB_USERNAME=root
DB_PASSWORD=root

BROADCAST_DRIVER=log
CACHE_DRIVER=file
QUEUE_CONNECTION=sync
SESSION_DRIVER=file
EOF

    # Generate application key
    php "/var/www/backend/artisan" key:generate --ansi
fi

# Database
php "/var/www/backend/artisan" migrate

Let's break this down a bit. First, we install the Composer dependencies, specifying the folder to run composer install from with the -d option. We then check whether there's already a .env file, and if there isn't, we create one with some pre-configured settings matching our Docker setup. Notice that we leave the APP_KEY environment variable empty; that is why we run the command to generate the Laravel application key right after creating the .env file. We then move on to setting up the database.
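The "create the file only if it is missing" pattern is what makes the script safe to run repeatedly. Here is a reduced sketch of it, working in a temporary folder instead of /var/www/backend; the create_env function and its two-line .env content are simplifications made up for this example:

```shell
#!/bin/bash

# Hypothetical helper: create a minimal .env in the given folder unless one exists
create_env () {
    local target="$1/.env"

    if [ ! -f "$target" ]; then
        # The heredoc body and its closing EOF must start at the beginning of the line
        cat > "$target" << EOF
APP_NAME=demo
APP_KEY=
EOF
        echo "created"
    else
        echo "kept"
    fi
}

# Demonstration in a throwaway folder
dir=$(mktemp -d)
create_env "$dir"   # first run creates the file
create_env "$dir"   # second run leaves it untouched
rm -rf "$dir"
```

Because an existing file is never overwritten, any value you have customised in .env survives a later demo init.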

Just like the demo file at the beginning of this article, we need to make the init file executable:

$ chmod +x .docker/backend/init

By setting it as the entrypoint for the container we invoke, it will be the first and only script to be run before the container is destroyed.

You can try the command out straight away, regardless of the current state of your project:

$ demo init

At this point though, you've probably taken care of most of the steps covered by the script already (e.g. generating the .env files or installing the dependencies). If you wish to test the complete process, you can run demo destroy, delete the entire project, and start afresh by cloning your own repository or this one (in the latter case, check out the part-4 branch), without forgetting to update the function in .bashrc if the path has changed. Then run demo init again.

I personally find the experience of seeing the whole project set itself up incredibly satisfying, but maybe that's just me.

Why the double slash? You might have noticed that, in the init function, the path to the init file is preceded with a double slash:

... docker compose run --rm --entrypoint="//opt/files/init" backend ...

This is not a typo. For some reason, when there's only one slash Windows will prepend the current local path to the script's, consequently complaining that the file cannot be found (duh). Adding another slash prevents that behaviour, while being ignored on other platforms.

That leaves us with the update function, whose job is to make sure our environment is up to date:

# Update the Docker environment
update () {
    git pull \
        && build \
        && composer install \
        && artisan migrate \
        && yarn install \
        && start
}

This is a convenience method that will pull the repository, build the images in case the Dockerfiles have changed, make sure any change of dependency is applied and new migrations are run, and restart the containers that need it (i.e. whose image has changed).

Managing separate repositories

As I mentioned before, in a regular setup you are more likely to have the Docker environment, the backend application and the frontend application in separate repositories. Bash can also help in this situation: assuming the src folder is git-ignored and the code is hosted on GitHub, a function pulling the applications' repositories could look like this:

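# Clone or update the repositories
repositories () {
    local repos=(frontend backend)

    # Abort if the src folder is missing
    cd src || return

    for repo in "${repos[@]}"; do
        # Clone the repository, or pull the latest changes if it already exists
        git clone "git@github.com:username/${repo}.git" "$repo" || (cd "$repo" && git pull) || true
    done

    cd ..
}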

Conclusion

The aim of this series is to build a flexible environment that makes our lives easier. That means the user experience must be as slick as possible, and having to remember dozens of complicated commands doesn't quite fit the bill.

Bash is a simple yet powerful tool that, when combined with Docker, makes for a great developer experience. After today's article, it will be much simpler to interact with our environment, and if you happen to forget a command, a refresher is now just one demo away.

I kept things as simple as possible to avoid cluttering the post, but there is obviously much more you can get out of this duo. Many more commands can be simplified – just tailor the layer to your needs.

In the next part of this series, we will see how to generate a self-signed SSL/TLS certificate in order to bring HTTPS to our environment.