Docker for local web development, part 7: using a multi-stage build to introduce a worker

Last updated: 2022-02-19 :: Published: 2020-05-25

In this series

- Introduction: why should you care?

- Part 1: a basic LEMP stack

- Part 2: put your images on a diet

- Part 3: a three-tier architecture with frameworks

- Part 4: smoothing things out with Bash

- Part 5: HTTPS all the things

- Part 6: expose a local container to the Internet

- Part 7: using a multi-stage build to introduce a worker ⬅️ you are here

- Part 8: scheduled tasks

- Conclusion: where to go from here


Introduction

No one likes slow websites.

Pages with higher response times have higher bounce rates, which translate into lower conversion rates. When your website relies on an API, you want that API to be fast – you don't want to feel like it's having a cup of tea with your request, before insisting that the response has another butter scone prior to sending it your way.

There are many ways to improve an API's responsiveness, and one of them, which is also the focus of today's article, is the use of queues. Queues are basically to-do lists of tasks which, unlike flossing, will be completed eventually. What's important about those tasks – called jobs – is that they don't need to be performed during the lifecycle of the initial request.

Typical examples of such jobs include sending a welcome email, resizing an image, or computing some statistics – whatever the task is, there's no need to make the end user wait for it to be completed. Instead, the job is placed in a queue to be dealt with later, and a response is sent immediately to the client. In other words, the job is made asynchronous, resulting in a much faster response time.

Queued jobs are processed by what we call workers. Workers monitor queues and pick up jobs as they appear – they're a bit like cashiers at the supermarket, processing the content of trolleys as they come. And just like more cashiers can be called for backup when there's a sudden spike in customers, more workers can be added whenever the queues get filled up more quickly than they're emptied.

Finally, queues are essentially lists of messages that need to be stored in a database, which is sometimes referred to as a message broker. Redis is an excellent choice for this, for it's super fast (in-memory storage) and it offers data structures well suited to this kind of thing. It's also very easy to set up with Docker and plays nicely with Laravel, which is why we are going to use it today.
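To make the roles of the queue and the worker more concrete, here is a toy sketch in shell, where a plain text file stands in for the message broker. It is not how Redis or Laravel implement queues, and QUEUE_FILE, enqueue and work_once are names made up for this illustration.

```shell
# Toy model of a queue: a text file where each line is one pending job.
# QUEUE_FILE, enqueue and work_once are hypothetical names for this sketch.
QUEUE_FILE="$(mktemp)"

# Producer: add a job to the end of the queue and return immediately.
enqueue() {
    printf '%s\n' "$1" >> "$QUEUE_FILE"
}

# Worker: take the oldest job off the queue and process it.
# Returns 1 when the queue is empty.
work_once() {
    local job
    [ -s "$QUEUE_FILE" ] || return 1
    job="$(head -n 1 "$QUEUE_FILE")"
    # Remove the first line to mark the job as taken.
    tail -n +2 "$QUEUE_FILE" > "$QUEUE_FILE.tmp" && mv "$QUEUE_FILE.tmp" "$QUEUE_FILE"
    echo "processed: $job"
}

# The "request" only enqueues; the actual work happens later.
enqueue "send welcome email"
enqueue "resize image"

# A worker drains the queue in first-in, first-out order.
while work_once; do :; done
```

Running it prints the two jobs in the order they were queued. A real broker adds what this sketch leaves out, such as safe concurrent access by several workers and retries for failed jobs.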

The assumed starting point of this tutorial is where we left things at the end of the previous part, corresponding to the repository's part-6 branch.

If you prefer, you can also directly check out the part-7 branch, which is the final result of this article.

Installing Redis

Now that all of the characters have been introduced, it's time to get into the plot.

The first thing we need to do is to install the Redis extension for PHP, since it is not part of the pre-compiled ones. As this extension is a bit complicated to set up, we'll use a convenient script featured in the official PHP images' documentation, which makes it easy to install PHP extensions across Linux distributions.

Replace the content of the backend's Dockerfile with this one:

FROM php:8.1-fpm-alpine

# Import extension installer
COPY --from=mlocati/php-extension-installer /usr/bin/install-php-extensions /usr/bin/

# Install extensions
RUN install-php-extensions pdo_mysql bcmath opcache redis

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

# Configure PHP
COPY .docker/php.ini $PHP_INI_DIR/conf.d/opcache.ini

# Use the default development configuration
RUN mv $PHP_INI_DIR/php.ini-development $PHP_INI_DIR/php.ini

# Install extra packages
RUN apk --no-cache add bash mysql-client mariadb-connector-c-dev

# Create user based on provided user ID
ARG HOST_UID
RUN adduser --disabled-password --gecos "" --uid $HOST_UID demo

# Switch to that user
USER demo

Note that redis was added to the list of extensions.

Build the image:

$ demo build backend

Our next task is to run an instance of Redis. In accordance with the principle of running a single process per container, we'll create a dedicated service in docker-compose.yml, and since the official images include an Alpine version, that's what we are going to use:

# Redis Service
redis:
  image: redis:6-alpine
  command: ["redis-server", "--appendonly", "yes"]
  volumes:
    - redisdata:/data

The image's default start-up command is redis-server with no option, but as per the documentation, it doesn't cover data persistence. In order to enable it, we need to set the appendonly option to yes, hence the command configuration setting, overriding the default one (this also shows you how to do this without using a Dockerfile).

For data persistence to be fully functional, we also need a volume, to be added at the bottom of the file:

# Volumes
volumes:
  mysqldata:
  phpmyadmindata:
  redisdata:

Finally, as Redis is going to be used by the backend service, we need to ensure the former is started before the latter. Update the backend service's configuration:

# Backend Service
backend:
  build:
    context: ./src/backend
    args:
      HOST_UID: $HOST_UID
  working_dir: /var/www/backend
  volumes:
    - ./src/backend:/var/www/backend
    - ./.docker/backend/init:/opt/files/init
    - ./.docker/nginx/certs:/usr/local/share/ca-certificates
  depends_on:
    mysql:
      condition: service_healthy
    redis:
      condition: service_started

Save docker-compose.yml and start the project to download the new image and create the corresponding container and volume:

$ demo start

This will also recreate the backend container in order to use the updated image we built earlier – the one including the Redis extension.

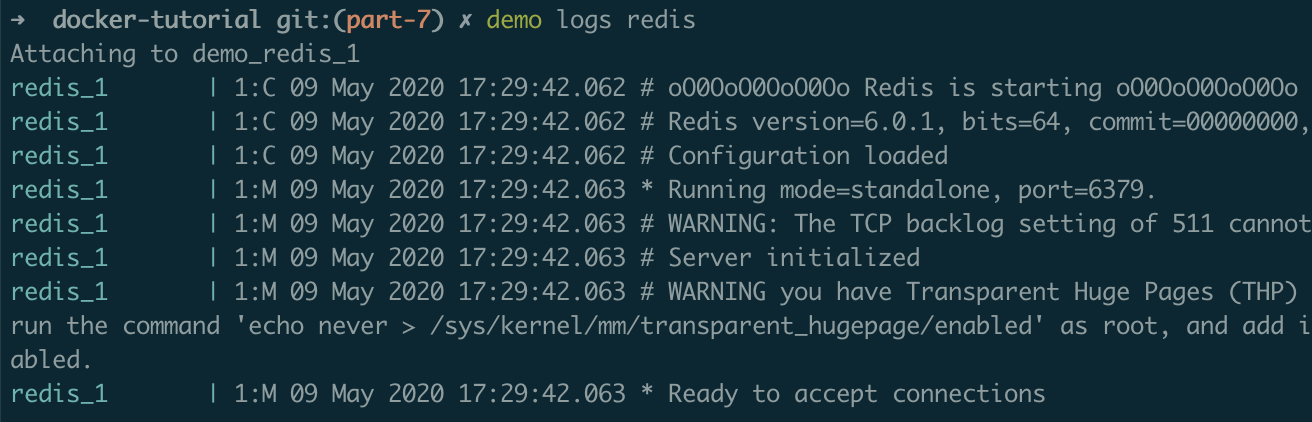

To make sure Redis is running properly, take a look at the logs:

$ demo logs redis

They should end with a line saying that Redis is ready to accept connections.

There's one last thing we need to do prior to creating our job: the backend application is currently set up to run the jobs immediately, and we need to tell it to queue them using Redis instead.

Open src/backend/.env, and spot the following line:

QUEUE_CONNECTION=sync

Replace it with these two lines:

QUEUE_CONNECTION=redis

REDIS_HOST=redis

That's all we need here, because the other parameters' default values are already the right ones (you can find them in src/backend/config/database.php).
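If you would rather script this change than edit the file by hand, something along these lines should work. It is only a sketch: the use_redis_queue function name is made up, and it assumes the file contains the QUEUE_CONNECTION=sync line shown above and no existing REDIS_HOST entry.

```shell
# Hypothetical helper: switch a Laravel .env file from the sync queue
# driver to Redis, adding REDIS_HOST right after the updated line.
use_redis_queue() {
    local env_file="$1"
    # Write to a temporary file first, as "sed -i" behaves differently
    # between GNU and BSD (macOS) versions of sed.
    sed 's/^QUEUE_CONNECTION=sync$/QUEUE_CONNECTION=redis\
REDIS_HOST=redis/' "$env_file" > "$env_file.tmp" && mv "$env_file.tmp" "$env_file"
}

# Usage, from the project's root:
# use_redis_queue src/backend/.env
```

Lines other than QUEUE_CONNECTION=sync are left untouched, so running the helper a second time changes nothing.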

Monitoring Redis

If you want to use an external tool to access your Redis database, you can simply update the service's configuration in docker-compose.yml and add a ports section mapping your local machine's port 6379 to the container's:

...
ports:
  - 6379:6379
...

From there, all you need to do is configure a database connection in your software of choice, setting localhost:6379 to access the Redis database while the container is running.

As pointed out by Utkarsh Vishnoi, you could also set up a new service to run Redis Commander, a bit like what we've done with phpMyAdmin.

The job

Laravel has built-in scaffolding tools we can use to create a job:

$ demo artisan make:job Time

This command will create a new Jobs folder in src/backend/app, containing a Time.php file. Open it and change the content of the handle method to this one:

/**
 * Execute the job.
 *
 * @return void
 */
public function handle()
{
    \Log::info(sprintf('It is %s', date('g:i a T')));
}

All the job does is log the current time. The class already has all of the necessary traits to make it queueable, so there's no need to worry about that.

Laravel has a nice command scheduler we can use to define tasks that need to be executed periodically, with built-in helpers to manage queued jobs specifically.

Open the src/backend/app/Console/Kernel.php file and update the schedule method:

/**
 * Define the application's command schedule.
 *
 * @param \Illuminate\Console\Scheduling\Schedule $schedule
 * @return void
 */
protected function schedule(Schedule $schedule)
{
    $schedule->job(new \App\Jobs\Time)->everyMinute();
}

We essentially ask the scheduler to dispatch the Time job every minute.

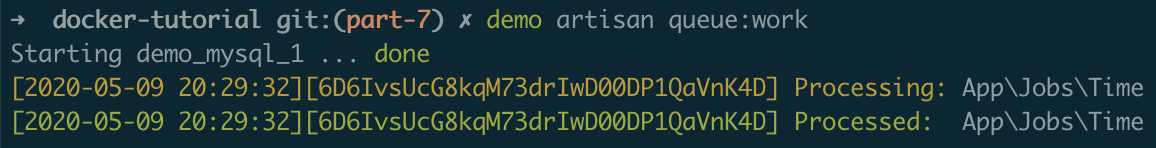

It won't do that by itself though, and needs to be started via an Artisan command. But before we run it, we'll start a queue worker manually, so we can see the jobs being processed in real time.

Open a new terminal window and run the following command:

$ demo artisan queue:work

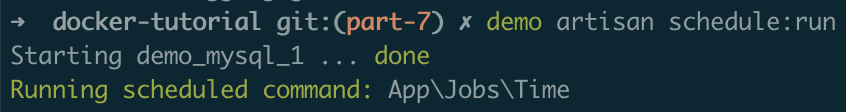

You can now go back to the first terminal window and run the scheduler:

$ demo artisan schedule:run

Which should display something like this:

If all went well, the other window should now show this:

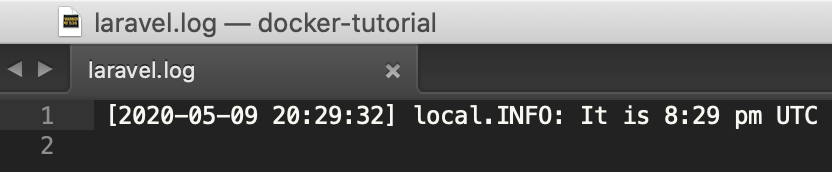

And if you open src/backend/storage/logs/laravel.log, you should see a new line created by the job, containing a message of the form "It is" followed by the current time.
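To check this from the terminal instead of opening the file, a small helper like the one below could print the most recent line written by the job. The last_time_entry name is made up for this sketch, and it relies only on the "It is" prefix used in the job's handle method.

```shell
# Hypothetical helper: print the latest log line written by the Time job.
# It looks for the "It is " prefix used in the job's handle method.
last_time_entry() {
    local log_file="${1:-src/backend/storage/logs/laravel.log}"
    grep 'It is ' "$log_file" | tail -n 1
}

# Usage, from the project's root:
# last_time_entry
```

Passing a path as the first argument lets you point it at another log file if yours lives elsewhere.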

Our queue is operational! You can now close the worker's terminal window, which will also stop it.

This was just a test, however. We don't want to have to manually start the worker in a separate window every time we start our project – we need this to happen automatically.

A proper worker

This is where we're finally going to leverage multi-stage builds. The idea is basically to split the Dockerfile into different sections containing slightly different configurations, and which can be targeted individually to produce different images. Let's see what that means in practice.

Replace the content of src/backend/Dockerfile with this one (the changes are the as backend alias on the first line and the new worker stage at the bottom):

FROM php:8.1-fpm-alpine as backend

# Import extension installer
COPY --from=mlocati/php-extension-installer /usr/bin/install-php-extensions /usr/bin/

# Install extensions
RUN install-php-extensions bcmath pdo_mysql opcache redis

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

# Configure PHP
COPY .docker/php.ini $PHP_INI_DIR/conf.d/opcache.ini

# Use the default development configuration
RUN mv $PHP_INI_DIR/php.ini-development $PHP_INI_DIR/php.ini

# Install extra packages
RUN apk --no-cache add bash mysql-client mariadb-connector-c-dev

# Create user based on provided user ID
ARG HOST_UID
RUN adduser --disabled-password --gecos "" --uid $HOST_UID demo

# Switch to that user
USER demo

FROM backend as worker

# Start worker
CMD ["php", "/var/www/backend/artisan", "queue:work"]

We now have two separate stages: backend and worker. The former is basically the original Dockerfile – we've simply named it backend using the as keyword at the very top:

FROM php:8.1-fpm-alpine as backend

The latter aims to describe the worker, and is based on the former:

FROM backend as worker

# Start worker
CMD ["php", "/var/www/backend/artisan", "queue:work"]

All we do here is reuse the backend stage almost as is, only overriding its default command with the queue:work Artisan command. In other words, whenever a container is started for the worker stage, its main process will be the queue worker instead of PHP-FPM.

How do we start such a container? We first need to define a separate service in docker-compose.yml:

# Worker Service
worker:
  build:
    context: ./src/backend
    target: worker
    args:
      HOST_UID: $HOST_UID
  working_dir: /var/www/backend
  volumes:
    - ./src/backend:/var/www/backend
  depends_on:
    - backend

This all looks familiar already, except for the build section which now has an extra property: target. This property allows us to specify which stage should be used as the base image for the service's containers.
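For reference, Compose's target property corresponds to the --target option of docker build. The sketch below builds each stage directly, outside of Compose. The build_stage helper and the demo-backend and demo-worker image tags are made up for illustration, HOST_UID falls back to the current user's ID as an assumption, and by default the script only prints the commands so you can review them first.

```shell
# Hypothetical helper: build one stage of the backend's Dockerfile.
# Prints the command unless DRY_RUN=0 is set, in which case it runs it.
build_stage() {
    local stage="$1"
    # Assemble the command as positional parameters to keep quoting intact.
    set -- docker build \
        --target "$stage" \
        --build-arg "HOST_UID=${HOST_UID:-$(id -u)}" \
        --tag "demo-$stage" \
        ./src/backend
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# To be run from the project's root.
build_stage backend
build_stage worker
```

Since the worker stage starts from the backend stage, building it also builds everything the backend stage contains; only the default command differs.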

We're almost done with docker-compose.yml – we just need to update the definition of the backend service to tell it to target the backend stage:

# Backend Service
backend:
  build:
    context: ./src/backend
    target: backend
    args:
      HOST_UID: $HOST_UID
  working_dir: /var/www/backend
  volumes:
    - ./src/backend:/var/www/backend
    - ./.docker/backend/init:/opt/files/init
    - ./.docker/nginx/certs:/usr/local/share/ca-certificates
  depends_on:
    mysql:
      condition: service_healthy
    redis:
      condition: service_started

Save the file and build the corresponding images:

$ demo build backend

$ demo build worker

Start the project for the new images to be picked up:

$ demo start

Then run the scheduler again:

$ demo artisan schedule:run

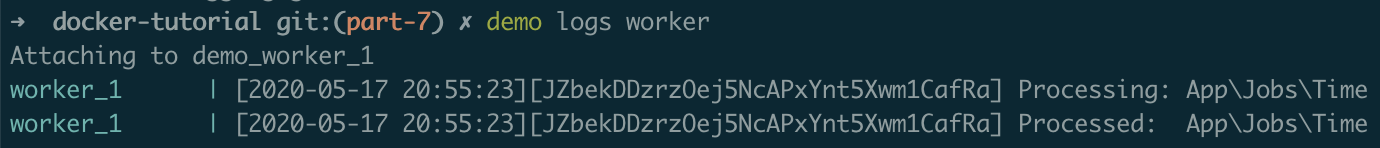

If all went well, the job should be scheduled and a new line should appear in src/backend/storage/logs/laravel.log, while the worker's container logs display a couple of new lines:

$ demo logs worker

Your worker is now complete! It will run silently in the background every time you start your project, ready to process any job your application throws at it.
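Remember the cashiers called for backup in the introduction? With the worker defined as its own service, Compose's --scale option can start several worker containers at once. The sketch below is one way to try it. The scale_workers helper is a made-up name, it calls docker compose directly rather than going through the demo script, and by default it only prints the command instead of running it.

```shell
# Hypothetical helper: run several containers for the worker service.
# Prints the command unless DRY_RUN=0 is set, in which case it runs it.
scale_workers() {
    local count="${1:-2}"
    set -- docker compose up --detach --scale "worker=$count"
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

# To be run from the project's root, where docker-compose.yml lives.
scale_workers 3
```

All of these containers would pick up jobs from the same Redis queue, which is what lets them share the load.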

Updating the initialisation script

If you've been with me from the start and are using a Bash layer to manage your setup, all that's left to do is to update the backend's initialisation script so it uses Redis for queues by default.

The steps are very similar to what we did at the beginning of this article – open .docker/backend/init, and spot the following line:

QUEUE_CONNECTION=sync

Replace it with these two lines and save the file:

QUEUE_CONNECTION=redis

REDIS_HOST=redis

Done!

Conclusion

Multi-stage builds are a powerful tool of which this is a mere introduction. Each stage can refer to a different image, basically allowing maintainers to come up with all sorts of pipeline-like builds, where the tools used at each stage are discarded to only keep the final output in the resulting image. Think about that for a minute.

I also encourage you to check out these best practices, to make sure you're getting the most out of your Dockerfiles.

Redis can also be used in ways that go beyond a simple message broker. You could for example use it as a local cache layer right now, instead of using Laravel's array or file drivers.

Finally, today's article leaves us with a couple of observations: the first one is that life is too short for flossing; the second is that so far we've been running the Laravel scheduler manually, although the documentation indicates a cron entry should be used for that. How do we fix this?

In the next part of this series, we will introduce a scheduler to run tasks periodically the Docker way, without using traditional cron jobs.