Docker for local web development, part 3: a three-tier architecture with frameworks

Last updated: 2023-04-17 :: Published: 2020-03-30

You can also subscribe to the RSS or Atom feed, or follow me on Twitter.

In this series

- Introduction: why should you care?

- Part 1: a basic LEMP stack

- Part 2: put your images on a diet

- Part 3: a three-tier architecture with frameworks ⬅️ you are here

- Part 4: smoothing things out with Bash

- Part 5: HTTPS all the things

- Part 6: expose a local container to the Internet

- Part 7: using a multi-stage build to introduce a worker

- Part 8: scheduled tasks

- Conclusion: where to go from here

Foreword

In all honesty, what we've covered so far is pretty standard. Articles about LEMP stacks on Docker are legion, and while I hope to add some value through a beginner-friendly approach and a certain level of detail, there was hardly anything new (after all, I was already writing about this back in 2015).

I believe this is about to change with today's article. There are many ways to manage a multitiered project with Docker, and while the approach I am about to describe certainly isn't the only one, I also think this is a subject that doesn't get much coverage at all.

In that sense, today's article is probably where the rubber meets the road for some of you. That is not to say the previous ones are negligible – they constitute a necessary introduction contributing to making this series comprehensive – but this is where the theory meets the practical complexity of modern web applications.

The assumed starting point of this tutorial is where we left things at the end of the previous part, corresponding to the repository's part-2 branch.

If you prefer, you can also check out the part-3 branch directly, which is the final result of today's article.

Again, this is by no means the one and only approach, just one that has been successful for me and the companies I set it up for.

A three-tier architecture?

After setting up a LEMP stack on Docker and shrinking down the size of the images, we are about to complement our MySQL database with a frontend application based on Vue.js and a backend application based on Laravel, in order to form what we call a three-tier architecture.

Behind this somewhat intimidating term is a popular way to structure an application, which consists of separating the presentation layer (a.k.a. the frontend), the application layer (a.k.a. the backend) and the persistence layer (a.k.a. the database), ensuring each part is independently maintainable, deployable, scalable, and easily replaceable if need be. Each of these layers represents one tier of the three-tier architecture. And that's it!

In such a setup, it is common for the backend and frontend applications to each have their own repository. For simplicity's sake, however, we'll stick to a single repository in this tutorial.

The backend application

Before anything else, let's get rid of the previous containers and volumes (not the images, as we still need them) by running the following command from the project's root directory:

$ docker compose down -v

Remember that down destroys the containers, and -v deletes the associated volumes.

Let's also get rid of the previous PHP-related files, to make room for the new backend application. Delete the .docker/php folder, the .docker/nginx/conf.d/php.conf file and the src/index.php file. Your file and directory structure should now look similar to this:

docker-tutorial/
├── .docker/
│   ├── mysql/
│   │   └── my.cnf
│   └── nginx/
│       └── conf.d/
│           └── phpmyadmin.conf
├── src/
├── .env
├── .env.example
├── .gitignore
└── docker-compose.yml

The new backend service

Replace the content of docker-compose.yml with this one:

version: '3.8'

# Services
services:

  # Nginx Service
  nginx:
    image: nginx:1.21-alpine
    ports:
      - 80:80
    volumes:
      - ./src/backend:/var/www/backend
      - ./.docker/nginx/conf.d:/etc/nginx/conf.d
      - phpmyadmindata:/var/www/phpmyadmin
    depends_on:
      - backend
      - phpmyadmin

  # Backend Service
  backend:
    build: ./src/backend
    working_dir: /var/www/backend
    volumes:
      - ./src/backend:/var/www/backend
    depends_on:
      mysql:
        condition: service_healthy

  # MySQL Service
  mysql:
    image: mysql/mysql-server:8.0
    environment:
      MYSQL_ROOT_PASSWORD: root
      MYSQL_ROOT_HOST: "%"
      MYSQL_DATABASE: demo
    volumes:
      - ./.docker/mysql/my.cnf:/etc/mysql/my.cnf
      - mysqldata:/var/lib/mysql
    healthcheck:
      test: mysqladmin ping -h 127.0.0.1 -u root --password=$$MYSQL_ROOT_PASSWORD
      interval: 5s
      retries: 10

  # PhpMyAdmin Service
  phpmyadmin:
    image: phpmyadmin/phpmyadmin:5-fpm-alpine
    environment:
      PMA_HOST: mysql
    volumes:
      - phpmyadmindata:/var/www/html
    depends_on:
      mysql:
        condition: service_healthy

# Volumes
volumes:

  mysqldata:

  phpmyadmindata:

The main update is the removal of the PHP service in favour of the backend service, although they are quite similar. The build key now points to a Dockerfile located in the backend application's directory (src/backend), which is also mounted as a volume on the container.

As the backend application will be built with Laravel, let's create an Nginx server configuration based on the one provided in the official documentation. Create a new backend.conf file in .docker/nginx/conf.d:

server {
    listen      80;
    listen      [::]:80;
    server_name backend.demo.test;
    root        /var/www/backend/public;

    add_header X-Frame-Options "SAMEORIGIN";
    add_header X-Content-Type-Options "nosniff";

    index index.php;

    charset utf-8;

    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    location = /favicon.ico { access_log off; log_not_found off; }
    location = /robots.txt  { access_log off; log_not_found off; }

    error_page 404 /index.php;

    location ~ \.php$ {
        fastcgi_pass  backend:9000;
        fastcgi_param SCRIPT_FILENAME $realpath_root$fastcgi_script_name;
        include       fastcgi_params;
    }

    location ~ /\.(?!well-known).* {
        deny all;
    }
}

Mind the values for server_name and fastcgi_pass (now pointing to port 9000 of the backend container).

Why not use Laravel Sail? If you're already a Laravel developer, you might have come across Sail, Laravel's official Docker-based development environment. While their approach is similar to this series' in many ways, the main difference is that Sail is meant to cater for Laravel monoliths only. In other words, Sail is meant for applications that are fully encompassed within Laravel, as opposed to applications where the Laravel bit is only one component among others, as is the case in this series.

If you're curious about Laravel Sail, I've published a comprehensive guide that will teach you everything you need to know.

You also need to update your local hosts file with the new domain names (have a quick look here if you've forgotten how to do that):

127.0.0.1 backend.demo.test frontend.demo.test phpmyadmin.demo.test

Note that we also added the frontend's domain to save us a trip later, and that we changed phpMyAdmin's from phpmyadmin.test to phpmyadmin.demo.test for consistency.
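If you'd like to double-check the hosts file before going further, here is a minimal sketch of a helper that lists the domains still missing from it. The missing_hosts function is hypothetical (it is not part of the tutorial's repository), and the /etc/hosts path in the usage comment assumes macOS or Linux.

```shell
#!/bin/sh
# Print each domain that has no active entry in the given hosts file.
# Usage: missing_hosts <hosts-file> <domain>...
missing_hosts() {
  hosts_file=$1
  shift
  for domain in "$@"; do
    # Strip comments, put one field per line, then look for an exact match.
    if ! sed 's/#.*//' "$hosts_file" | tr -s ' \t' '\n\n' | grep -qxF "$domain"; then
      echo "$domain"
    fi
  done
}

# Example (the path is an assumption, adjust it for your system):
# missing_hosts /etc/hosts backend.demo.test frontend.demo.test phpmyadmin.demo.test
```

An empty output means all three domains are already declared.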

You should open the phpmyadmin.conf file in the .docker/nginx/conf.d folder now and update the following line accordingly:

server_name phpmyadmin.demo.test;

Now create the src/backend directory and add a Dockerfile with this content:

FROM php:8.1-fpm-alpine

Your file structure should now look like this:

docker-tutorial/
├── .docker/
│   ├── mysql/
│   │   └── my.cnf
│   └── nginx/
│       └── conf.d/
│           ├── backend.conf
│           └── phpmyadmin.conf
├── src/
│   └── backend/
│       └── Dockerfile
├── .env
├── .env.example
├── .gitignore
└── docker-compose.yml

Laravel requires a few PHP extensions to function properly, so we need to ensure those are installed. The Alpine version of the PHP image comes with a number of pre-installed extensions which we can list by running the following command (from the project's root, as usual):

$ docker compose run --rm backend php -m

Now if you remember, in the first part of this series we used exec to run Bash on a container, whereas this time we are using run to execute the command we need. What's the difference?

exec simply allows us to execute a command on an already running container, whereas run does so on a new container which is immediately stopped after the command is over. It does not delete the container by default, however; we need to specify --rm after run for it to happen.

The command essentially runs php -m on the backend container, and gives the following result:

[PHP Modules]

Core

ctype

curl

date

dom

fileinfo

filter

ftp

hash

iconv

json

libxml

mbstring

mysqlnd

openssl

pcre

PDO

pdo_sqlite

Phar

posix

readline

Reflection

session

SimpleXML

sodium

SPL

sqlite3

standard

tokenizer

xml

xmlreader

xmlwriter

zlib

[Zend Modules]

That is quite a lot of extensions, which might come as a surprise after reading the previous part praising Alpine images for featuring the bare minimum by default. The reason is also hinted at in the previous article: since it is not always simple to install things on an Alpine distribution, the PHP image's maintainers chose to make their users' lives easier by preinstalling a bunch of extensions.

The final result is around 80 MB, which is still very small.
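To work out which extensions are missing without comparing lists by eye, something like the following sketch could help. The missing_extensions function is hypothetical, and the requirement list in the usage comment is an assumption (Laravel's documented requirements, plus pdo_mysql since we are using MySQL), so adjust it to your own project.

```shell
#!/bin/sh
# Print every required extension that is absent from a `php -m` listing read on stdin.
# Usage: php -m | missing_extensions <extension>...
missing_extensions() {
  installed=$(cat)
  for ext in "$@"; do
    # -i: php -m mixes cases (PDO, Phar); -x: match whole lines only; -F: no regex.
    if ! printf '%s\n' "$installed" | grep -qixF "$ext"; then
      echo "$ext"
    fi
  done
}

# Example (assumed requirement list; -T disables the pseudo-TTY so the output pipes cleanly):
# docker compose run --rm -T backend php -m | missing_extensions \
#   bcmath ctype curl dom fileinfo json mbstring openssl pcre pdo pdo_mysql tokenizer xml
```

Run against the listing above with that assumed list, the sketch would report bcmath and pdo_mysql.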

Now that we know which extensions are missing, we can complete the Dockerfile to install them, along with Composer which is needed for Laravel:

FROM php:8.1-fpm-alpine

# Install extensions
RUN docker-php-ext-install pdo_mysql bcmath

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

Here we're leveraging multi-stage builds (more specifically, using an external image as a stage) to get Composer's latest version from the Composer Docker image directly (we'll talk more about multi-stage builds in part 7).

Non-root user

There's one last thing we need to cover before proceeding further.

First, modify the backend service's build section in docker-compose.yml:

  # Backend Service
  backend:
    build:
      context: ./src/backend
      args:
        HOST_UID: $HOST_UID
    working_dir: /var/www/backend
    volumes:
      - ./src/backend:/var/www/backend
    depends_on:
      mysql:
        condition: service_healthy

The section now comprises a context key pointing to the directory containing the backend's Dockerfile (the same location as before), but also an args section that we'll cover in a minute.

Next, update the backend's Dockerfile again so it looks like this:

FROM php:8.1-fpm-alpine

# Install extensions
RUN docker-php-ext-install pdo_mysql bcmath

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

# Create user based on provided user ID
ARG HOST_UID
RUN adduser --disabled-password --gecos "" --uid $HOST_UID demo

# Switch to that user
USER demo

Why do we need to do this?

An important thing to understand is that, unless stated otherwise, containers will use the root user by default.

While this is arguably fine for a local setup, it sometimes leads to permission issues, like when trying to access or update a file on the host machine that was created by the container (which is often the case for the laravel.log file, for instance).

This happens because the user and group IDs used by the container to create the file don't necessarily match those of the host machine, in which case the operation is not permitted (I don't want to linger on this for too long, but I invite you to read this great post to better understand what's going on).

Users of Docker Desktop for Mac typically don't need to worry about this because it's doing some magic under the hood to avoid the problem altogether (unfortunately I couldn't find a proper description of the corresponding mechanism, other than this GitHub issue from 2018 referring to a section of the Docker documentation that doesn't exist anymore).

On the other hand, Linux and WSL 2 users are usually affected, and as the latter is getting more traction, there are suddenly a lot more people facing file permission issues.

So what do the extra Dockerfile instructions do? The first one indicates that there will be a HOST_UID build-time variable available while building the image (once again, I don't want to spend too much time on this, but this excellent article will tell you everything you need to know about Dockerfile variables). The corresponding value will come from the args section we also added to the docker-compose.yml file earlier, which will contain the host machine's current user ID (more on this in a minute).

We then create a user with the same ID in the container, using Alpine's adduser command (please refer to the documentation for details). We name that user demo.

Finally, we tell Docker to switch to that user using the USER instruction, meaning that from then on, demo will be the container's default user.

There's one last thing we need to do, and that is to pass the user ID from the host machine to the docker-compose.yml file.

First, let's find out what this value is by running the following command in a terminal on the host machine:

$ id -u

On macOS for instance, that value might be 501.

Now open the .env file at the root of the project and add the following line to it:

HOST_UID=501

Change the value for the one obtained with the previous command if different. We're done!
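If you'd rather not edit the file by hand, the two steps above can be combined. This is only a sketch: set_host_uid is a hypothetical helper, and it assumes the .env file sits at the project's root as described.

```shell
#!/bin/sh
# Write HOST_UID=<current user ID> to an env file, replacing any previous value.
# Usage: set_host_uid <env-file>
set_host_uid() {
  env_file=$1
  tmp_file="$env_file.tmp"
  touch "$env_file"
  # Keep every line except an existing HOST_UID entry
  # (grep exits with 1 when it outputs nothing, hence the "|| true").
  grep -v '^HOST_UID=' "$env_file" > "$tmp_file" || true
  echo "HOST_UID=$(id -u)" >> "$tmp_file"
  mv "$tmp_file" "$env_file"
}

# Example, from the project's root:
# set_host_uid .env
```

Running it more than once is harmless, as the previous HOST_UID line is dropped before the new one is appended.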

With this setup, we now have the guarantee that files shared between the host machine and the containers always belong to the same user ID, no matter which side they were created from. This solution also has the huge advantage of working across operating systems.

I know I went through this quite quickly, but I don't want to drown you in details in the middle of a post which is already quite dense. My advice to you is to bookmark the few URLs I provided in this section and to come back to them once you've completed this tutorial.

How about the group ID? While researching this issue, most of the resources I found also featured the creation of a group with the same ID as the user's group on the host machine. I initially intended to do the same, but it was causing all sorts of conflicts with no easy way to work around them.

That being said, it seems that having the same user ID on both sides is enough to avoid those file permission issues anyway, which led me to the conclusion that creating a group with the same ID as well was unnecessary. If you think this is a mistake though, please let me know why in the comments.

By the way, that doesn't mean that the user created in the container doesn't belong to any group – if none is specified, adduser will create one with the same ID as the user's by default, and assign it to it.

We now need to rebuild the image to apply the changes:

$ docker compose build backend

Creating a new Laravel project

Once the new version of the image is ready, run the following command:

$ docker compose run --rm backend composer create-project --prefer-dist laravel/laravel tmp "9.*"

This will use the version of Composer installed on the backend container (no need to install Composer locally!) to create a new Laravel 9 project in the container's /var/www/backend/tmp folder.

As per docker-compose.yml, the container's working directory is /var/www/backend, onto which the local folder src/backend was mounted – if you look into that directory now on your local machine, you will find a new tmp folder containing the files of a fresh Laravel application. But why did we not create the project in backend directly?

Behind the scenes, composer create-project performs a git clone, and that won't work if the target directory is not empty. In our case, backend already contains the Dockerfile, which is necessary to run the command in the first place. We essentially created the tmp folder as a temporary home for our project, and we now need to move the files back to their final location:

$ docker compose run --rm backend sh -c "mv -n tmp/.* ./ && mv tmp/* ./ && rm -Rf tmp"

This will run the content between the double quotes on the container, sh -c basically being a trick allowing us to run more than a single command at once (if we ran docker compose run --rm backend mv -n tmp/.* ./ && mv tmp/* ./ && rm -Rf tmp instead, only the first mv instruction would be executed on the container, and the rest would be run on the local machine).
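The splitting behaviour can be observed without Docker at all. In the sketch below, in_container is a hypothetical stand-in for docker compose run --rm backend that only reports the arguments it receives, which makes it visible what would actually reach the container.

```shell
#!/bin/sh
# Hypothetical stand-in for `docker compose run --rm backend`:
# it only reports the arguments it was given.
in_container() {
  echo "[container] $*"
}

# Unquoted: the host shell splits the line at &&, so the stand-in only
# receives the first command and the second one runs on the host.
in_container echo one && echo "[host] two"

# Quoted: the whole chain travels as a single argument to sh -c.
in_container sh -c "echo one && echo two"
```

The first call prints "[container] echo one" followed by "[host] two", whereas the second prints "[container] sh -c echo one && echo two": the chain only stays together when it is wrapped in quotes.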

Shouldn't Composer be in its own container? A common way to deal with package managers is to isolate them into their own containers, the main reason being that they are external tools mostly used during development and that they've got no business shipping with the application itself (which is all correct).

I actually used this approach for a while, but it comes with downsides that are often overlooked: as Composer allows developers to specify a package's requirements (necessary PHP extensions, PHP's minimum version, etc.), by default it will check the system on which it installs the application's dependencies to make sure it meets those criteria. In practice, this means the configuration of the container hosting Composer must be as close as possible to the application's, which often means doubling the work for the maintainer. As a result, some people choose to run Composer with the --ignore-platform-reqs flag instead, ensuring dependencies will always install regardless of the system's configuration.

This is a dangerous thing to do: while most of the time dependency-related errors will be spotted during development, in some instances the problem could go unnoticed until someone stumbles upon it, either on staging or even in production (this is especially true if your application doesn't have full test coverage). Moreover, staged builds are an effective way to separate the package manager from the application in a single Dockerfile, but that's a topic I will broach later in this series. Bear with me!

By default, Laravel has created a .env file for you, but let's replace its content with this one (you will find this file under src/backend):

APP_NAME=demo

APP_ENV=local

APP_KEY=base64:BcvoJ6dNU/I32Hg8M8IUc4M5UhGiqPKoZQFR804cEq8=

APP_DEBUG=true

APP_URL=http://backend.demo.test

LOG_CHANNEL=single

DB_CONNECTION=mysql

DB_HOST=mysql

DB_PORT=3306

DB_DATABASE=demo

DB_USERNAME=root

DB_PASSWORD=root

BROADCAST_DRIVER=log

CACHE_DRIVER=file

QUEUE_CONNECTION=sync

SESSION_DRIVER=file

Not much to see here, apart from the database configuration (mind the value of DB_HOST) and some standard application settings.

Let's try out our new setup:

$ docker compose up -d

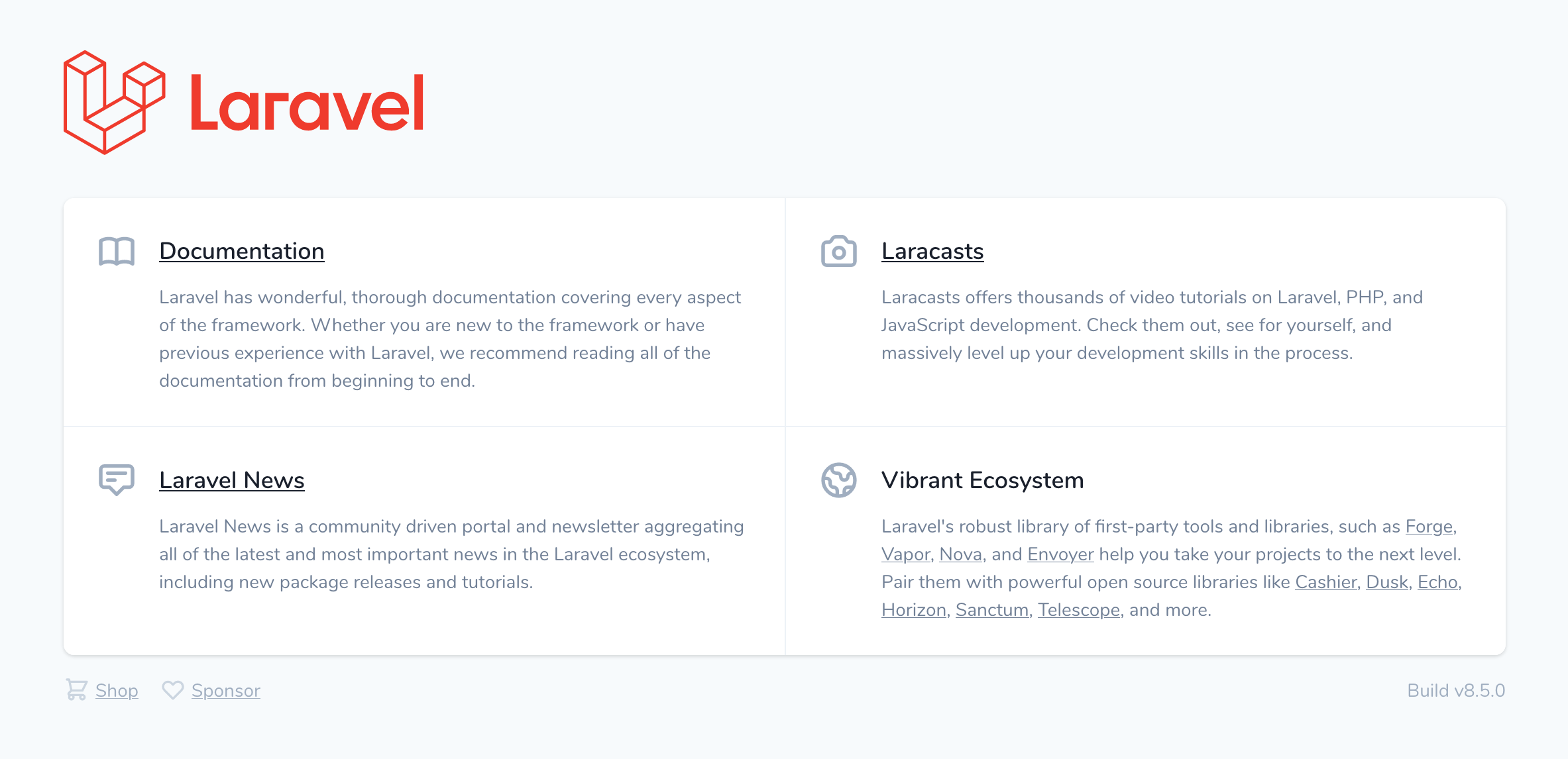

Once it is up, visit backend.demo.test; if all went well, you should see Laravel's home page:

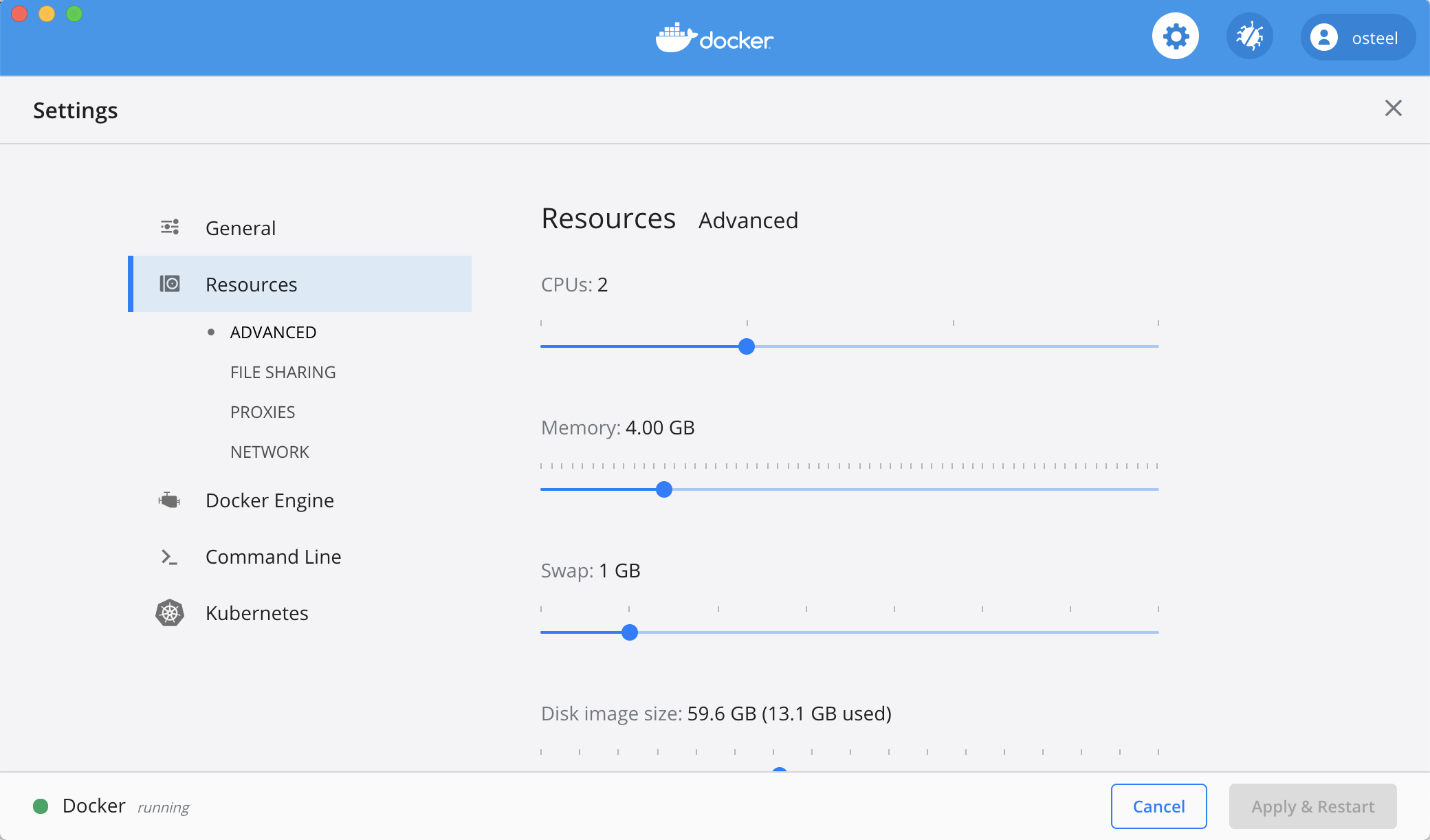

Pump up that RAM! If the backend's response time feels slow, a quick and easy trick for Docker Desktop is to increase the amount of RAM it is allowed to use. Open the preferences and adjust the Memory slider under Resources:

The default value is 2 GB; I doubled that and it made a notable difference (if you've got 8 GB or more, it will most likely not impact your machine's performance in any visible way).

There are other things you could do to try and speed things up on macOS (e.g. Mutagen) but they feel a bit hacky and I'm personally not a big fan of them. If PHP is your language of choice though, make sure to check out the OPcache section below.

And if you're a Windows user and haven't given WSL 2 a try yet, you should probably look into it.

Let's also verify our database settings by running Laravel's default migrations:

$ docker compose exec backend php artisan migrate

You can log into phpmyadmin.demo.test (with the root / root credentials) to confirm the presence of the demo database and its newly created tables (failed_jobs, migrations, password_resets and users).

There is one last thing we need to do before moving on to the frontend application: since our aim is to have it interact with the backend, let's add the following endpoint to routes/api.php:

<?php // ignore this line, it's for syntax highlighting only

Route::get('/hello-there', function () {
    return 'General Kenobi';
});

Try it out by accessing backend.demo.test/api/hello-there, which should display "General Kenobi".

Our API is ready!

We are done for this section but, if you wish to experiment further, while the backend's container is up you can run Artisan and Composer commands like this:

$ docker compose exec backend php artisan

$ docker compose exec backend composer

And if it's not running:

$ docker compose run --rm backend php artisan

$ docker compose run --rm backend composer
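Choosing between exec and run can also be automated. The following is a rough sketch rather than a definitive wrapper: compose_subcommand is a hypothetical helper, and the usage comment assumes a Docker Compose v2 release whose ps command supports combining --services and --status.

```shell
#!/bin/sh
# Print "exec" if the service appears in a newline-separated list of running
# services read on stdin, and "run --rm" otherwise.
# Usage: <list of running services> | compose_subcommand <service>
compose_subcommand() {
  if grep -qxF "$1"; then
    echo "exec"
  else
    echo "run --rm"
  fi
}

# Example (assumes Docker Compose v2; $sub is left unquoted on purpose
# so that "run --rm" expands to two separate arguments):
# sub=$(docker compose ps --services --status running | compose_subcommand backend)
# docker compose $sub backend php artisan
```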

Using Xdebug? I don't use it myself, but at this point you might want to add Xdebug to your setup. I won't cover it in detail because this is too PHP-specific, but this tutorial will show you how to make it work with Docker and Docker Compose.

OPcache

You can skip this section if PHP is not the language you intend to use in the backend. If it is though, I strongly recommend you follow these steps because OPcache is a game changer when it comes to local performance, especially on macOS (but it will also improve your experience on other operating systems).

I won't explain it in detail here and will simply quote the official PHP documentation:

OPcache improves PHP performance by storing precompiled script bytecode in shared memory, thereby removing the need for PHP to load and parse scripts on each request.

It's not enabled by default but we can easily do so by following a few steps borrowed from this article (which I invite you to read for more details on the various parameters).

First, we need to introduce a custom configuration for PHP. Create a new .docker folder in src/backend, and add a php.ini file to it with the following content:

[opcache]
opcache.enable=1
opcache.revalidate_freq=0
opcache.validate_timestamps=1
opcache.max_accelerated_files=10000
opcache.memory_consumption=192
opcache.max_wasted_percentage=10
opcache.interned_strings_buffer=16
opcache.fast_shutdown=1

We place this file here and not at the very root of the project because this configuration is specific to the backend application, and we need to reference it from its Dockerfile.

The Dockerfile is indeed where we can import this configuration from, and also where we will install the opcache extension. Replace its content with the following:

FROM php:8.1-fpm-alpine

# Install extensions
RUN docker-php-ext-install pdo_mysql bcmath opcache

# Install Composer
COPY --from=composer:latest /usr/bin/composer /usr/local/bin/composer

# Configure PHP
COPY .docker/php.ini $PHP_INI_DIR/conf.d/opcache.ini

# Use the default development configuration
RUN mv $PHP_INI_DIR/php.ini-development $PHP_INI_DIR/php.ini

# Create user based on provided user ID
ARG HOST_UID
RUN adduser --disabled-password --gecos "" --uid $HOST_UID demo

# Switch to that user
USER demo

Note that we've added an instruction to copy the php.ini file over to the directory where custom configurations are expected to go in the container, namely the conf.d folder under the location given by the $PHP_INI_DIR environment variable. While we were at it, we also enabled the default development settings provided by the image's maintainers, which set up error reporting parameters, among other things (that's what the following RUN instruction is for).

And that's it! Build the image again and restart the containers – you should notice some improvement around the backend's responsiveness:

$ docker compose build backend

$ docker compose up -d

The frontend application

The third tier of our architecture is the frontend application, for which we will use the ever more popular Vue.js.

The steps to set it up are actually quite similar to the backend's; first, let's add the corresponding service to docker-compose.yml, right after the backend one:

  # Frontend Service
  frontend:
    build: ./src/frontend
    working_dir: /var/www/frontend
    volumes:
      - ./src/frontend:/var/www/frontend
    depends_on:
      - backend

Nothing that we haven't seen before here. We also need to specify in the Nginx service that the frontend service should be started first:

  # Nginx Service
  nginx:
    image: nginx:1.21-alpine
    ports:
      - 80:80
    volumes:
      - ./src/backend:/var/www/backend
      - ./.docker/nginx/conf.d:/etc/nginx/conf.d
      - phpmyadmindata:/var/www/phpmyadmin
    depends_on:
      - backend
      - phpmyadmin
      - frontend

Then, create a new frontend folder under src and add the following Dockerfile to it:

FROM node:17-alpine

We simply pull the Alpine version of Node.js' official image for now, which ships with both Yarn and npm (which are package managers like Composer, but for JavaScript). I will be using Yarn, as I am told this is what the cool kids use nowadays.

Let's build the image:

$ docker compose build frontend

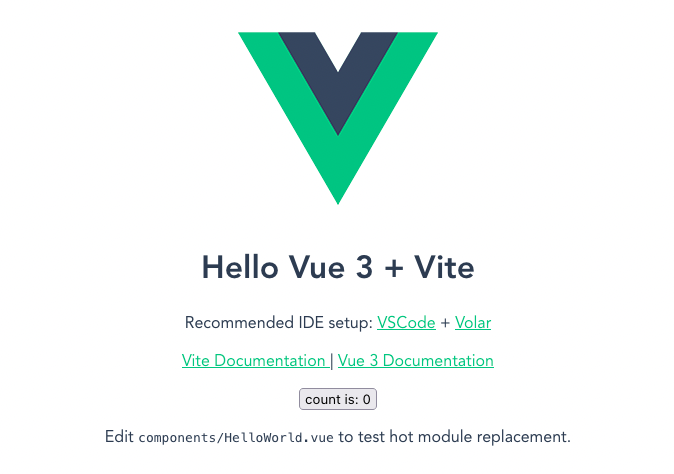

Once the image is ready, create a fresh Vue.js project with the following command:

$ docker compose run --rm frontend yarn create vite tmp --template vue

We're using Vite to create a new project in the tmp directory. This directory is located under /var/www/frontend, which is the container's working directory as per docker-compose.yml.

Why Vite? Vite is a set of frontend tools that was brought to my attention by Brian Parvin in the comments. It brings significant performance gains over Vue CLI around compilation and hot-reloading.

Just like the backend, let's move the files out of tmp and back to the parent directory:

$ docker compose run --rm frontend sh -c "mv -n tmp/.* ./ && mv tmp/* ./ && rm -Rf tmp"

If all went well, you will find the application's files under src/frontend on your local machine.

Finally, let's install the project's dependencies:

$ docker compose run --rm frontend yarn

Issues around node_modules? A few readers have reported symlink issues related to the node_modules folder on Windows. To be honest I am not entirely sure what is going on here, but it seems that creating a .dockerignore file at the root of the project containing the line node_modules fixes it (you might need to delete that folder first). Again, I'm not sure why that is, but you might find answers here.

.dockerignore files are very similar to .gitignore files in that they allow us to specify files and folders that should be ignored when copying or adding content to a container. They are not really necessary in the context of this series and I only touch upon them in the conclusion, but you can read more about them here.

We've already added frontend.demo.test to the hosts file earlier, so let's move on to creating the Nginx server configuration. Add a new frontend.conf file to .docker/nginx/conf.d, with the following content (most of the location block comes from this article):

server {
    listen      80;
    listen      [::]:80;
    server_name frontend.demo.test;

    location / {
        proxy_pass         http://frontend:8080;
        proxy_http_version 1.1;
        proxy_set_header   Upgrade $http_upgrade;
        proxy_set_header   Connection 'upgrade';
        proxy_cache_bypass $http_upgrade;
        proxy_set_header   Host $host;
    }
}

This simple configuration redirects the traffic from the domain name's port 80 to the container's port 8080, which is the port the development server will use.

Let's also update the vite.config.js file in src/frontend:

import { defineConfig } from 'vite'
import vue from '@vitejs/plugin-vue'

export default defineConfig({
  plugins: [vue()],
  server: {
    host: true,
    hmr: {port: 80},
    port: 8080,
    watch: {
      usePolling: true
    }
  }
})

This will ensure the hot-reload feature is functional. I won't go into details about each option here, as the point is not so much about configuring Vite as seeing how to articulate a frontend and a backend application with Docker, regardless of the chosen frameworks. You are welcome to browse the documentation though!

Let's complete our Dockerfile by adding the command that will start the development server:

FROM node:17-alpine

# Start application
CMD ["yarn", "dev"]

Rebuild the image:

$ docker compose build frontend

And start the project so Docker picks up the image changes (the restart command won't do that, as it only restarts the existing containers without recreating them from the new image):

$ docker compose up -d

Once everything is up and running, access frontend.demo.test and you should see this welcome page:

If you don't, take a look at the container's logs to see what's going on:

$ docker compose logs -f frontend

Open src/frontend/src/components/HelloWorld.vue and update some content (the <h1> tag, for example). Go back to your browser and you should see the change happen in real time – this is hot-reload doing its magic!

To make sure our setup is complete, all we've got left to do is to query the API endpoint we defined earlier in the backend, with the help of Axios. Let's install the package with the following command:

$ docker compose exec frontend yarn add axios

Once Yarn is done, replace the content of src/frontend/src/App.vue with this one:

<template>
  <div id="app">
    <HelloThere :msg="msg"/>
  </div>
</template>

<script>
import axios from 'axios'
import HelloThere from './components/HelloThere.vue'

export default {
  name: 'App',
  components: {
    HelloThere
  },
  data () {
    return {
      msg: null
    }
  },
  mounted () {
    axios
      .get('http://backend.demo.test/api/hello-there')
      .then(response => (this.msg = response.data))
  }
}
</script>

All we are doing here is hitting the hello-there endpoint we created earlier and assigning its response to the msg property, which is passed on to the HelloThere component. Once again I won't linger too much on this, as this is not a Vue.js tutorial – I merely use it as an example.

Delete src/frontend/src/components/HelloWorld.vue, and create a new HelloThere.vue file in its place:

<template>
  <div>
    <img src="https://tech.osteel.me/images/2020/03/04/hello.gif" alt="Hello there" class="center">
    <p>{{ msg }}</p>
  </div>
</template>

<script>
export default {
  name: 'HelloThere',
  props: {
    msg: String
  }
}
</script>

<style>
p {
  font-family: "Arial", sans-serif;
  font-size: 90px;
  text-align: center;
  font-weight: bold;
}

.center {
  display: block;
  margin-left: auto;
  margin-right: auto;
  width: 50%;
}
</style>

The component contains a little bit of HTML and CSS code, and displays the value of msg in a <p> tag.

Save the file and go back to your browser: the content of our API endpoint's response should now display at the bottom of the page.

If you want to experiment further, while the frontend's container is up you can run Yarn commands like this:

$ docker compose exec frontend yarn

And if it's not running:

$ docker compose run --rm frontend yarn

Using separate repositories. As I mentioned at the beginning of this article, in a real-life situation the frontend and backend applications are likely to be in their own repositories, and the Docker environment in a third one. How do we articulate the three of them?

The way I do it is by adding the src folder to the .gitignore file at the root, and checking out both the frontend and backend applications in it, in separate directories. And that's about it! Since src is git-ignored, you can safely check out other codebases in it, without conflicts. Theoretically, you could also use Git submodules to achieve this, but in practice it adds little to no value, especially when applying what we'll cover in the next part.
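As a rough illustration of that workflow, the sketch below adds src/ to .gitignore only if it isn't listed yet. The ignore_path helper is hypothetical, and the repository URLs in the usage comment are placeholders to replace with your own.

```shell
#!/bin/sh
# Add an entry to a .gitignore file unless it is already listed.
# Usage: ignore_path <gitignore-file> <entry>
ignore_path() {
  touch "$1"
  grep -qxF "$2" "$1" || echo "$2" >> "$1"
}

# Example, from the Docker environment's root (placeholder URLs):
# ignore_path .gitignore src/
# git clone git@example.com:acme/backend.git src/backend
# git clone git@example.com:acme/frontend.git src/frontend
```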

Conclusion

That was another long one, well done if you made it this far!

This article once again underscores the fact that, when it comes to building such an environment, a lot is left to the maintainer's discretion. There is seldom any clear way of doing things with Docker, which is both a strength and a weakness – a somewhat overwhelming flexibility. These little detours contribute to making these articles dense, but I think it is important for you to know that you are allowed to question the way things are done.

On the same note, you might also start to wonder about the practicality of such an environment, with the numerous commands and syntaxes one needs to remember to navigate it properly. And you would be right. That is why the next article will be about using Bash to abstract away some of that complexity, to introduce a nicer, more user-friendly interface in its place.

You can subscribe to email alerts below to make sure you don't miss it, or you can also follow me on Twitter where I will share my posts as soon as they are published.
